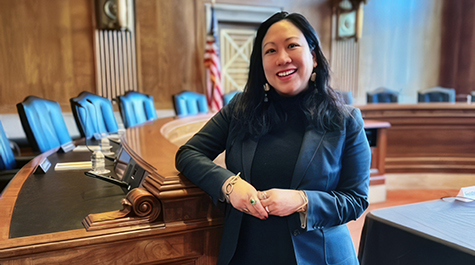

William & Mary Law Professor Margaret Hu Serves as Expert Witness on AI Before U.S. Senate Committee

Margaret Hu, the Taylor Reveley Research Professor, Professor of Law and Director of the Digital Democracy Lab at William & Mary Law School, testified before the U.S. Senate Committee on Homeland Security and Governmental Affairs on Nov. 8.

The hearing addressed “The Philosophy of AI: Learning from History, Shaping Our Future.”

Posing the question, “What does the future of AI governance look like?” the hearing explored the philosophical, ethical and historical dimensions of regulating AI, focusing on the possibilities and challenges that both legal solutions and AI technologies present.

“The reason we must consider the philosophy of AI is because we are at a critical juncture in history,” said Hu. “We are faced with a decision: either the law governs AI or AI governs the law.”

In addition to her work at William & Mary Law School, Hu is also a faculty research affiliate with Data Science and William & Mary’s Global Research Institute. Data and democracy are two of the four core initiatives of the university’s Vision 2026 strategic plan.

In opening remarks, Committee Chairman Gary Peters (D-MI) explained that regulatory oversight of AI and emerging technologies is necessary because technological disruptions reflect “moments in history” that have “affected our politics, influenced our culture and changed the fabric of our society.”

Joining Hu in offering expert testimony were Shannon Vallor, professor of ethics and philosophy at the University of Edinburgh, and Daron Acemoglu, professor of economics at MIT.

Acemoglu discussed the need to shape a future of AI in which the technologies work to support the worker, the citizen and democratic governments. He explained that the asymmetric privatization of AI research and development has skewed the technology toward serving corporate profit rather than benefiting its users.

Vallor pointed out that “AI is a mirror,” reflecting back historical biases and discrimination that have persisted over generations. As a result, she stated that the technology also reflected a point of view and should not be viewed as objective or neutral.

In her testimony, Hu argued that regulating AI systems effectively requires policymakers and lawmakers to look beyond a purely literal view of AI oversight. AI systems, she explained, are not simply technical components. Because AI is infused with branches of philosophy such as epistemology and ontology, it should be understood as reflecting philosophical aims and ambitions.

Generative AI, machine learning, algorithmic and other AI systems “digest collective narratives” and can reflect “existing hierarchies,” Hu explained. These systems can generate outputs “based on preexisting historical, philosophical, political and socioeconomic structures.” Acknowledging this at the outset makes it possible to see what is at stake and how AI may perpetuate inequities and antidemocratic values.

In the past few years, billions of dollars have been invested in private AI companies, adding urgency to the dialogue on rights-based AI governance. In her opening statement, Hu encouraged the senators to place the philosophy of AI side by side with the philosophy of the Constitution and ask whether the two are consistent. Drawing on her expertise as a constitutional law scholar and teacher, she described the need to examine the philosophical underpinnings of constitutional law as a means of enshrining rights and constraining power.

AI must be viewed in much the same way, Hu explained. Both the Constitution and AI are highly philosophical; putting them side by side reveals how they might be in tension with each other on a philosophical level.

The humanities and philosophies that have underpinned our “analogue democracy” must serve as our guide in a “digital democracy,” Hu said. Viewing AI too literally, as only a technology, risks failing to grasp its full impact as a potential challenge facing society.

Hu’s testimony raised the question of whether AI will be applied in a way that is consistent with constitutional philosophy, or whether it will alter, erode or mediate that philosophy.

AI is not only a knowledge structure, but a power and market structure, Hu pointed out during her testimony. With AI already being deployed for governance purposes, Hu noted that we are at a crossroads where we must grapple with whether and to what extent constitutional rights and core governance functions were meant to be mediated through commercial enterprises and AI technologies in this way. As the capacities of AI evolve, several risks will grow exponentially and more rapidly than can be anticipated.

Hu said that placing the philosophy of a constitutional democracy in conversation with a philosophy of AI makes it easier to see how AI, as a governing philosophy, might attempt to rival or compete with the governing philosophy of a democracy. History demonstrates that the law can be bent and contorted, especially when structures of power evolve into an ideology.

At the end of her testimony, Hu made the case that the foundational principles of a constitutional democracy provide a touchstone for analysis.

“I return to my opening question of whether the law will govern AI or AI will govern the law,” Hu said. “To preserve and reinforce a constitutional democracy, there is only one answer to this question. The law must govern AI.”

To watch the hearing, visit the Senate Committee on Homeland Security and Governmental Affairs page.

Editor’s note: Data and democracy are two of four cornerstone initiatives in William & Mary’s Vision 2026 strategic plan. Visit the Vision 2026 website to learn more.